EECS 477 Notes

Table of Contents

- 1. Final Exam Prep

- 2. 2015-12-04 Friday

- 3. 2015-12-02 Wednesday

- 4. 2015-11-30 Monday

- 5. 2015-11-23 Monday

- 6. 2015-11-20 Friday

- 7. 2015-11-18 Wednesday

- 8. 2015-11-16 Monday

- 9. 2015-11-11 Wednesday

- 10. 2015-11-09 Monday

- 11. 2015-11-06 Friday

- 12. 2015-11-04 Wednesday

- 13. 2015-11-02 Monday

- 14. 2015-10-30 Friday

- 15. 2015-10-28 Wednesday

- 16. 2015-10-26 Monday

- 17. 2015-10-21 Wednesday

- 18. 2015-10-16 Friday

- 19. 2015-10-14 Wednesday

- 20. 2015-10-12 Monday

- 21. 2015-10-09 Friday

- 22. 2015-10-07 Wednesday

- 23. 2015-10-05 Monday

- 24. 2015-10-02 Friday

- 25. 2015-09-30 Wednesday

- 26. 2015-09-28 Monday

- 27. 2015-09-25 Friday

- 28. 2015-09-23 Wednesday

- 29. 2015-09-21 Monday

- 30. 2015-09-18 Friday

- 31. 2015-09-16 Wednesday

- 32. 2015-09-14 Monday

- 33. 2015-09-11 Friday

- 34. 2015-09-09 Wednesday

- 35. 2015-09-02 Wednesday

- 36. 2015-08-31 Monday

- 37. 2015-08-28 Friday

- 38. 2015-08-26 Wednesday

- 39. 2015-08-24 Monday

1 Final Exam Prep

As discussed on Friday, the final exam will cover topics from the second half of the class. As prep work, I will put here a general listing of topics from the second half, as well as internal links to notes about them.

Topics (most recent first):

- Paging: First Day, Second Day

- Amortized Analysis: First Day (mostly introduction), Second Day

- Max Cut: Lecture. The second day was Wednesday before Thanksgiving; was not present.

- Maximum Satisfiability: First Day, Second Day

- Hitting Set: First Day (definition, 2 algorithms), Second Day (repeated execution and analysis of combined algorithm).

- Game Tree Evaluation: First Day, Second Day, Third Day

- Two Person Zero Sum Games: First Day, Second Day, Third Day

- Game Theory Intro: First Day, Second Day, Third Day

- Mixed Strategies (and the penny game)

- Strategic Form

- Behavioral/Extensive Form

- MCNF (some of this was before midterm, but oh well):

- First Day (definition, high level stuff)

- Second Day (transformations, residuals, LP, unimodularity)

- Third Day (duality)

- Fourth Day (duality again)

- Fifth Day (applications to shortest paths, dynamic programming, circulation)

- (all MCNF above this point was "pre-midterm", now for "post-midterm", i.e. the algorithms)

- Sixth Day (assumptions of algorithms, SSP)

- Seventh Day (Runtime of SSP, Capacity Scaling Algorithm)

- Eighth Day (CS algorithm, plus runtime)

- Max Flow == Min Cut

2 2015-12-04 Friday

2.1 Paging Continued

- \(n\) pages in process, main memory holds \(k

- Process makes requests for pages, if not in main memory, we need to read from disk and maybe evict a page from main memory.

- Need to come up with an strategy for evicting pages that will minimize page faults.

- If we knew all the page requests ahead of time, there would be a relatively simple algorithm that could be applied to minimize page faults.

- Problem: we don't know page requests ahead of time. So we can think of this as an adversarial game.

- When \(k

- So, it seems like there's not much we can do with a deterministic algorithm for the general case.

We would like to come up with an approximation algorithm and do an amortized analysis.

- \(c(G)\): number of page faults of algorithm \(G\) on sequence \(\sigma\).

- OPT: optimum page replacement strategy on \(\sigma\).

- \(c(G) \le \alpha + c(OPT) + \beta \:\:\: \forall \sigma \)

- Need an online algorithm (i.e. it has no future knowledge). OPT has the advantage of future knowledge.

2.1.1 Marking Algorithm

- Associates a bit with every page in main memory. Initially, all \(k\) slots are unmarked.

- When page \(p\) is requested:

- if \(p \not\in\) main memory:

- if all pages are marked, unmark all

- evict any unmarked page

- replace it with \(p\)

- mark p

- if \(p \not\in\) main memory:

Example: \(k = 3\)

Initial main memory (none are marked):

1 2 3 Request page 4. 2 is arbitrarily chosen to be evicted

1 4* 3 Request page 2. 1 is arbitrarily chosen to be evicted

2* 4* 3 Request page 4. Nothing changes

2* 4* 3 Request page 1. 3 is the only page that can be evicted.

2* 4* 1* Request page 3. All pages must be unmarked, and then 4 is arbitrarily chosen to be evicted.

2 3* 1

Ok. Looks pretty darn simple. This marking algorithm tries to emulate LRU (Least Recently Used), but with only one bit of memory to store how recently used. Or you could think of it the other way, that M is a generalization of LRU, because M has a choice to evict "any" page, and LRU specifies that the page it evicts is the least recently used.

For the purpose of analysis, we will define a "Phase". P0 is at the start. Pi is a maximal subsequence of requests containing \(k\) distinct pages.

Theorem: \(c(M) \le k c(OPT) + k\)

Proof: In each phase, \(M\) incurs no mare than \(k\) page faults (once a page is marked, it stays in main memory for the rest of the phase).

For the purpose of analyzing the optimal strategy, we will call the first requested page of phase \(q\). We will define \(Q\) to be the remaining page requests of the phase, plus the next one. \(Q\) contains exactly \(k\) distinct pages that are not equal to \(q\). By the end of \(Q\), OPT will have to incur a page fault (because \(q\) is in main memory, and it's impossible to have all \(k\) of the other distinct pages in \(Q\)).

The sequence of page requests will contain some number \(m\) of complete phases, followed maybe by an additional \(\sigma\), or maybe not. So there are \(m\) complete phases for the marking algorithm, and in the worst case there are \(m-1\) regions \(Q\). In each phase, the M algorithm incurs cost \(\le k\) while the optimal incurs cost \(\le 1\). In the final phase, the M algorithm incurs cost \(\le k\), while the optimal may or may not incur any cost.

So, we can easily see that \(c(M) \le k c(OPT) + k\)

Paging has tons of interesting "confluences" of concepts from this class:

- primal dual algorithms

- randomized algorithms

- amortized analysis

- Yao's principle

- more

Sadly, we didn't get a chance to cover it all.

2.2 Final

He will be asking questions about the second part of the course (after the midterm). You will be pretty screwed if you don't know LP anymore, but there won't be any explicit questions on the content of the first half. The exam will be open book and note (!!). Question types:

- Explain (insert some topic/algorithm/proof).

- Open book, so he may ask questions about the proof to show that you do understand the proof, and aren't just reading the proof for the first time.

- Simple problems - as in do thing x.

- Homework problems may be asked verbatim! This could be a "personalized" strategy, so you should look at what you did wrong in the homework and make sure you understand what you did wrong.

The rules about what you can bring are mostly guidelines, cause you'll be solving problems in front of (and with) him.

3 2015-12-02 Wednesday

3.1 Amortized Analysis (continued)

3.1.1 Aggregate Analysis

We were doing amortized analysis of the binary counter. Over all 2n increments, you'll get the following costs:

- Cost 1 for 2n-1 increments

- Cost 2 for 2n-2 increments

- Cost 3 for 2n-3 increments

- …

- Cost n for 2n-n (=1) increments

So the average cost is:

\begin{align*} \text{avg. cost} = \frac{1}{2^n} \sum_{i=1}^n 2^{n-i} i \end{align*}Actually, the cost n action happens twice. Let's do this in terms of bits:

- Bit 1 examined 2n times

- Bit 2 examined 2n-1 times

- …

- Bit n examined 1 (+1) times

So the total and average costs are:

\begin{align*} \text{total cost} &= 1 + \sum_{i=0}^n 2^i = 2^{n+1} - 1 + 1 = 2^n+1 \\ \text{average cost} &= \frac{2^{n+1}}{2^n} = 2 \end{align*}3.1.2 Accounting Method

We associate costs with actions on the data structure.

- When you increment the counter from 0, you travel to the first bit, and flip it. You leave a "candy". Your cost is 1 bit traveled plus 1 candy placed = 2.

- When you increment from 1 to 2, you travel to the first bit and flip it to zero, and eat that candy. Then, you travel to the second bit, flip it to one, and leave a candy there. Your cost is 2 travel, 1 candy placed, and 1 candy eaten, for a total of 2.

- When you increment from 2 to 3, you travel to the first bit, flip it to one, and leave a candy. Your cost is 1 travel, 1 candy, for a total of 2.

- When you increment from 3 to 4, you travel to the first bit, flip it to 0, eat the candy. Same thing at the second. Finally, at the third, you flip it to one, and leave a candy. Your cost is 3 travel, 1 candy placed, and 2 candy eaten, for a total of 2.

This pattern continues: no matter whan increment you do, you will always have a cost of (at most) 2, which happens to be the same as we got above.

How does this work? The idea is that when you flip a bit to 1, you also account for the fact that later on, you will be flipping it to zero (this is the act of leaving a candy). Then, when you actually do that operation later on, you "eat the candy", or in other words you don't have to pay that cost. This is actually really cool.

3.1.3 Potential Function Method

- Data structure Di: state after $i$th operation.

- Φ: maps state of data structure → real numbers. This is a potential function.

- ci: cost of $i$th operation

The total cost of all operations on the data structure (if there are \(m\) operations) is simply:

\begin{align*} \text{total cost} = \frac{1}{m} \sum_{i=1}^m c_i \end{align*}Now, we define \(\hat{c}_i\), to be the "amortized" cost of the \(i\)th operation. We define it as:

\begin{align*} \hat{c}_i = c_i - \Phi(D_{i-1}) + \Phi(D_i) \end{align*}The sum of the amortized costs is:

\begin{align*} \sum_{i=1}^m \hat{c}_i &= \sum_{i=1}^m (c_i - \Phi(D_{i-1}) + \Phi(D_i)) \\ &= \sum_{i=1}^m c_i - \Phi(D_0) + \Phi(D_m) \\ &\ge \sum_{i=1}^m c_i \end{align*}The last inequality holds whenever \(\Phi(D_m) \ge \Phi(D_0)\). By convention, most papers set \(\Phi(D_0) = 0\), and ensure that \(\Phi(D_m) \ge 0\) at all times.

With this formulation, we can come up with any potential function we like (that satisfies the above condition), and then sum up the amortized costs instead of the actual costs, and get a bound on the total cost.

In the binary counter, we let the potential function be the number of 1's in the counter. We can see that the value of \(\hat{c}_i\) is always 2.

In this case, the potentials are pretty much the same as the candies in the Accounting Method. This is not always the case; you can view the potential method as more general than the accounting method, because it can be used in situations where there's no clear way to "assign candies" in strategic locations like we did earlier.

3.2 Paging Problem

This problem has to do with memory management in operating systems.

- We have space in main memory for \(k\) pages.

- We have a process that has a working set of \(n\) pages.

- The \(n-k\) pages that are not stored in main memory are stored safely on disk.

We're going to view the process as an adversary that requests pages out of its working set. If the OS has it in main memory, nothing needs to happen. Otherwise, it should be loaded from the disk into main memory. The other problem is that the OS will (probably) have to evict a page from main memory to make room for the page.

The OS would like to maximize the number of hits, or minimize the number of page faults (where it loads the page from disk).

Our worst case analysis would be simple - in the worst case, there is a page fault on every request, so we have a cost of 1 disk access for every page request.

We can see that a randomized algorithm could prevent the worst case for a process. But maybe we'd like to do an amortized analysis for a deterministic algorithm. Next time we'll talk about that.

4 2015-11-30 Monday

4.1 Homework 5 recap

Here is a nicer overview of the steps from the capacity scaling algorithm, for when you're actually stepping through it (instead of the algorithm as presented earlier, which is a bit difficult to interpret at times).

For each delta scaling phase:

- Copy over the residual network (nodes and arcs).

- Copy over the node potentials (π) from the previous iteration, as well as the reduced costs, upper bounds, and excesses.

- Any new edges (not in the previous residual) should be drawn, and have

their reduced costs computed. Chances are, some of these will have

negative reduced costs.

- You do arc reversal on those arcs, which will change the excesses. This is a good time to redraw the residual. (at least, this is when Dr. Liberatore redraws it).

- Select an excess and deficit vertex to do an augmentation on. You will

then recompute the node potentials (π) and the reduced costs (cij^π).

Also make sure to add in the reversed edges.

- Repeat this until all your excesses and deficits are less than Δ.

It seems like the other thing I missed was that you should assume that there is a path from 1 to 8, of very large cost. In his example (which he started last class), it looks like he made the cost 15. I'll have to get some input from Jeff on how he did that in class last Wednesday.

4.2 Amortized Analysis

Binary counter: suppose you have an \(n\) bit counter. Initially, it is set to all 0's. We will pretend this counter is a data structure that supports only one operation, which is increment. This does your typical increment operation (flip the bit and carry if it was 1). An increment operation has a cost defined by the number of bits you flip.

The worst case cost for an operation is going to be \(n\). However, it looks like most of your costs are going to be much smaller. Half of them will have cost 1. So amortized analysis will be a way of doing worst-case analysis that involves finding the cost over an entire sequence of operations.

5 2015-11-23 Monday

5.1 Max Cut

- Graph \(G=(V, E)\)

- Def: A cut is a partition of \(V\) into \(S\) and \(V-S\).

- Def: An edge \(e = \{u,v\}\)\) crosses cut \(S, V-S\) iff \(u \in S\), \(v \not\in S\).

- Let \(a_{ij}\) be the weight (≥ 0) associated with edge \(\{i,j\}\).

- The problem is to find a cut that maximizes \(\sum_{i,j\text{ crossing cut}} a_{ij}\)

This problem is NP-hard, so we'd like to find an approximation algorithm. Note a few things. First, the max cut for a bipartite graph is just the cut that separates the two sets of edges. Also, in general, we can assume that we are working with the "complete" graph, and that the edges are 0 if there was no edge in the original graph.

First Claim: there is a randomized algorithm with an approximation ratio of \(\frac{1}{2}\).

This algorithm simply assigns each node to \(S\) with probability \(\frac{1}{2}\). The probability that an edge crosses the cut is \(\frac{1}{2}\). So the expected probability is \(\frac{1}{2} \sum a_{ij}\), which is greater than or equal to half the maximum possible optimal (\(\sum a_{ij}\)).

Now, let's look at the integer linear program. First, our variables:

\begin{equation} x_i = \left\{\begin{array} +1 & \text{ if } i \in S \\ -1 & \text{ if } i \not\in S \\ \end{array}\right. \end{equation}Here's the "program":

\begin{align*} \max \sum_{iThis program is a bit of a downer because it's quadratic, and linear. We're gonna stick with it, We're going to relax the integer condition on x, in a very weird way.

Say that instead of \(x\) being scalar, we used \(\vec{v}\), a vector on the unit sphere in \(R^n\). If they are opposite each other, then the dot product will be -1, and if they are the same, the dot product will be 1. And we'll also relax the "integer" constraint on the \(\vec{v_i}\).

Okay. Let's now say we have \(B = (v_1, v_2, \dots, v_n\). Let \(y = B^T B\):

\begin{equation} y = B^T B = \begin{bmatrix} v_1^T \\ v_2^T \\ \vdots \\ v_n^T \end{bmatrix} \begin{bmatrix} v_1 & v_2 & \cdots & v_n \end{bmatrix} = \begin{bmatrix} v_1 \cdot v_1 & v_1 \cdot v_2 & \cdots & v_1 \cdot v_n \\ v_2 \cdot v_1 & v_2 \cdot v_2 & \cdots & v_2 \cdot v_n \\ \vdots & \vdots & \ddots & \vdots \\ v_n \cdot v_1 & v_n \cdot v_2 & \cdots & v_n \cdot v_n \\ \end{bmatrix} \end{equation}So now our objective is \(\max \sum_{i\lt j} a_{ij} \frac{1 - y_{ij}}{2}\). I guess we need that the matrix is positive semidefinite. So I guess we have a semidefinite program. We can solve this SDP to within some \(\epsilon\) in time polynomial in \(n\) and \(\log \epsilon\). Since ε is a constant here, we're good. This will give us vectors \(v_i^*\).

Our job will be to separate the vectors into two groups. Turns out we'll just select a random hyperplane. Woo randomness.

6 2015-11-20 Friday

6.1 MAX SAT

Recall:

- "Maximum Satisfiability"

- \(n\) boolean variables, \(x_1, \dots, x_n\).

- \(p\) clauses, \(c_1, \dots, c_p\)

- \(c_i = x_1 \lor \bar{x_2} \lor x_3\) (for example)

Continuing on:

- Let \(k(i)\) be the number of literals in clause \(i\).

- Let \(S_i^+ =\) the set of \(x\) variables that are not negated.

- For the example \(c_i\) above, \(S_i^+ = \{x_1, x_3\}\)

- Let \(S_i^- =\) the set of \(x\) variables that are negated.

- For the example \(c_i\) above, \(S_i^+ = \{x_2\}\)

In max sat, we want to maximize the number of clauses that are satisfied. Our initial randomized algorithm (which we applied when \(k(i) \ge k = 2\)) assigned each variable a value with probability \(\frac{1}{2}\). This gave a \(\frac{3}{4}\) approximation algorithm, which is tough to beat. But the performance is bad when \(k=1\).

So now we'll examine an algorithm based on a linear program. Let's use the following notation:

\begin{equation} y_j = \left\{\begin{array}{ll} 1 & \text{ if } x_j = T \\ 0 & \text{ otherwise} \end{array}\right. \end{equation}Now, here is the working ILP formulation:

\begin{align*} \max \sum_{i=1}^p z_i & \text{ s.t.} \\ \sum_{j \in S_i^+} y_j + \sum_{j \in S_i^-}(1-y_j) &\ge z_i \\ 0 \le z_i &\le 1 \\ y_j &\in \{0, 1\} \\ \end{align*}Our \(z_i\) are variables such that:

\begin{equation} z_i = \left\{\begin{array}{ll} 1 & \text{ if } c_i = T \\ 0 & \text{ otherwise} \end{array}\right. \end{equation}When we do the LP relaxation, \(y_i\) can range from 0 to 1. Then, our randomized algorithm will have \(Pr(x_j = T) = y_j^*\). So, the probability that a clause is satisfied is:

\begin{align*} Pr(C_i = T) &= 1 - \left(\prod_{j \in S_i^+} (1-y_j^*)\right) \left(\prod_{j \in S_i^-} y_j^*\right) \\ &\ge \left(1 - \left(1 - \frac{1}{k(i)}\right)^{k(i)}\right)z_i^* \\ &\ge \left(1 - \frac{1}{e}\right)z_i^* \end{align*}So, the expected number of clauses satisfied is \(\sum_{i=1}^p \left(1 - \frac{1}{e}\right)z_i^*\). The best you can do is \(\sum_{i=1}^p z_i^*\), so the approximation ratio is \(\left(1 - \frac{1}{e}\right) \approx 0.63\).

This isn't as good as the original algorithm, which had an approximation ratio of 0.75. But it deals better with the cases of small \(k\). Of course, the downside is that we have to solve a linear program. Which kinda stinks. So our best algorithm is just to run both and pick the best.

OK, what can we say about the expected performance of this "best" algorithm? Well, the algorithm has a performance of \(\max\{n_1, n_2\}\), where the two \(n\) variables are the performance of the two algorithms. Expectations of max's are very hard, so we will just say that:

\begin{align*} \max\{n_1, n_2\} &\ge \frac{n_1 + n_2}{2}\\ &= \frac{1}{2} \left(\sum_{i=1}^p \left(1 - \frac{1}{2^{k(i)}}\right) \sum_{i=1}^p \left(1 - \left(1 - \frac{1}{k(i)}\right)^{k(i)}\right)z_i^*\right) \\ &\ge \frac{1}{2} \sum_{i=1}^p z_i^* \left(1 - \frac{1}{2^{k(i)}}\right) \left(1 - \left(1 - \frac{1}{k(i)}\right)^{k(i)}\right)\\ &\ge \frac{1}{2} \sum_{i=1}^p z_i^* \frac{3}{2} \\ &= \frac{3}{4} \sum_{i=1}^p z_i^* \\ \end{align*}That step up above where we replaced the big stuff with \(\frac{3}{2}\) can actually be proven easily by induction on \(k\). But it's pretty darn cool result, because it's not true in general that the expectation of a max operation is just the max of the respective expectation. It works out this way because the two algorithms make up for each other's weaknesses (k=1).

7 2015-11-18 Wednesday

7.1 "Math Facts"

Fact #1:

\begin{equation} 1 - x \le \left(1 - \frac{x}{k}\right)^k \le e^{-x} \:\:\: \forall x \in R, \forall k \in Z^+ \end{equation}- in the limit as \(k\to\infty\), the right side is equality.

- when \(k=1\), the left side is equality.

Fact #2:

Let \(x_1, x_2, \dots, x_n \in [0,1]\), s.t. \(\sum_{i=1}^n x_i \ge \zeta \ge 0\). Then:

\begin{equation} \prod_{i=1}^n (1-x_i) \le \left(1 - \frac{\zeta}{n}\right)^n \end{equation}We'll "sketch" the proof without fully doing it. Let's look at the case \(n=2\), \(\zeta=1\). So, we have:

- \(x_1 + x_2 \ge 1\)

- Or, \(x_2 \ge 1 - x_1\).

The expression we're interested in is:

\begin{align*} (1 - x_1)(1 - x_2) \le x_2(1-x_2) = f(x_2) \end{align*}You can maximize \(f\) by looking at its derivative, which will give you \(x_2 = \frac{1}{2} = x_1\). So, plugging that back in:

\begin{align*} (1 - x_1)(1 - x_2) \le x_2(1-x_2) \le \left(1 - \frac{1}{2}\right)^2 \end{align*}Tada! You can do this generally by induction on \(n\), but we won't.

7.2 Back to Hitting Set

Here's the ILP relaxation of the hitting set problem:

- \(\min \sum_{e \in E} c_e x_e\), (we'll call this \(c(x)\)) s.t.

- \(\sum_{e \in T_i} x_e \ge 1\)

- \(0 \le x_e \le 1\)

Earlier, we interpreted \(x_e\) as \(Pr[e\in A]\). Then, we defined a randomized algorithm to "solve" this problem. Except it didn't solve it, because its solutions weren't necessarily feasible. But hey, it performed well! So, let's look at the probability that this algorithm produces an infeasible solution. We'l start by looking at the probability that a single set \(T_i\) isn't "covered":

\begin{align*} Pr[T_i \cap A \ne \emptyset] &= 1 - Pr[\text{any member is in A}] \\ &= 1 - \prod_{e \in T_i} Pr[e \not\in A] \\ &= P - \prod_{e \in T_i} (1 - x_e^*) \end{align*}For each \(T_i\), we have \(\sum_{e \in T_i} x_e^* \ge 1\). Which means that we can say:

\begin{align*} Pr[T_i \cap A \ne \emptyset] &= P - \prod_{e \in T_i} (1 - x_e^*) \\ &\ge 1 - \left(1 - \frac{1}{|T_i |}\right) \\ &\ge 1 - \frac{1}{e} > 0 \end{align*}Now, suppose we repeat the randomized algorithm \(t\) times. Now, we know that \(e\in A\) iff \(\exists\) one trial in which \(e\in A\). Looking at the expressions above, we know that then:

\begin{align*} Pr[T_i \cap A = \emptyset] \le \frac{1}{e^t} \le \frac{1}{cp} \end{align*}That last bit holds if we choose a \(t \in \Theta(\log p)\). Now, let's define an indicator random variable \(\gamma_i\) which is 1 when \(T_i\) is not covered. Since it's an indicator RV, its expectation is just the probability of its event, which means:

\begin{align*} E[\gamma_i ] \le \frac{1}{cp} \end{align*}Now, we can talk about the expected number of sets not hit after \(t\) trials:

\begin{align*} E[\sum_{i=1}^p \gamma_i ] \le \frac{1}{c} \end{align*}This is true due to the linearity of expectation. It is not true that the \(\gamma_i\) are independent.

So, repeating the algorithm can allow us to bound the number of sets not covered and make it arbitrarily small. But, this ability comes at a cost. Let's look at the expected cost of the new solution:

\begin{align*} E[c(A)] = t * c(x^*) \le t* \text{OPTIMAL} \end{align*}This leads to the theorem that this repeated randomized algorithm is an \(O(\log p)\)-apx algorithm, and it returns a hitting set with probability \(\ge 1 - \frac{1}{c}\).

Let's summarize our algorithms for Hitting Set:

- Primal/Dual algorithm: apx \(\max |T_i |\) (for vertex cover, this is 2)

- both simple and deterministic

- Repeated Randomized algorithM: apx \(O(\log p)\)

- also simple, but randomized and has a nonzero probability of failure.

7.3 Maximum Satisfiability

- Have \(n\) decision variables, \(x_1, x_2, \dots, x_n \in \{T, F\}\).

- Have \(c_i\), a bunch of clauses over \(x_1, \dots, x_n\).

- For example, this could be \(x_1 \lor x_2\), \(x_1 \lor \lnot x_3\), or \(\not x_1 \lor x_2 \lor \lnot x_3\).

- In general, a clause may only be a disjunction of variables (or their negations).

- We would like to find a truth assignment to \(x_1, x_2, \dots, x_n\) that maximizes the number of true clauses.

Let's imagine right now that each clause contains \(\ge k = 2\) literals. There is an incredibly easy approximation algorithm here: just assign \(x_i = T\) with probability \(\frac{1}{2}\). The probability of a clause not being satisfied is less than or equal to \(\frac{1}{4}\). The expected number of clauses being satisfied is \(\frac{3}{4}p\), (where \(p\) is the number of clauses), and you can't do better than \(p\). So this is a \(\frac{3}{4}\) apx algorithm. But it's not so good when \(k\) is 1.

8 2015-11-16 Monday

8.1 Hitting Set

- Given \(E\), \(c_e \ge 0 \:\: \forall e \in E\), \(T_1, T_2, \dots, T_p \subseteq E\)

- Find \(A \subseteq E\) w/ min \(c(A) = \sum_{e \in A} c_e\)

- such that \(A \cap T_I \ne \emptyset, \:\: (i=1,2,\dots,p)\)

- An example of this problem is vertex cover.

An ILP for this:

- \(x_e\) is 1 if \(e \in A\), or 0 otherwise.

- Minimize \(c_{opt}\) subject to constraints based on the \(T\) sets.

The linear relaxation would allow \(x_e\) to take any value between 0 and 1. The dual involves some variables \(y_i\) for \(i=1,2,\dots,p\). Complementary slackness conditions:

- \(y_i \left(\sum_{e \in T_I} x_e - 1\right) = 0\)

- \(x_e \left(\sum_{i,e\in T_i} y_i - c_e \right) = 0\)

- And we want our solutions to be integral.

Unfortunately, this problem is not unimodular, so we can't expect all the conditions to be satisfied. So I guess we're just going to try to enforce the bottom two conditions. Furthermore, there is something we get when we apply integrality to the second CS condition. I guess it's nice cause we know that the value of \(x\) must be 0 or 1, which makes the conditions a bit stronger.

8.2 Primal Dual Algorithm for HS

We start with an integral primal and a feasible dual, which are relataed by complementary slackness. In MCNF, we could start with \(x=0\), and \(\pi=0\).

- \(A \gets \emptyset\) i.e. x=0

- \(y_i \gets 0 \:\:\: (i=1,2, \dots, p)\)

- while \(\exists K: T_k \cap A = \emptyset\) (that is, while x is still infeasible)

- (comment) Change x so that it's slightly "less infeasible". (In MCNF, this was pushing flow from excess to deficit).

- (comment) Update y so as to ensure complementary slacknesss. (In MCNF, π ← π-d).

- Increase \(y_k\) until \(\exists e: \sum_{i,e\in T_i} y_i = c_e\).

- \(A \gets A \cup \{e\}\) (which means \(x_e=1\)

8.3 Example

We have a graph with nodes \(a,b,c,d\), which have costs \(2,3,4,5\) respectively. We have edges \((a,b), (a,c), (a,d), (b,c), (c,d)\). It's a rectangle with a diagonal. The \(y_i\) values exist for each edge, and they are all initialized to 0.

We start with the \(T_k\) associated with the edge \((a,c)\). We increase \(y_k\) (you might say instead \(y_{ac}\)) to 2, because this is the first value that will make a vertex's CS condition switch. This causes vertex $a$'s condition to be true, so we can add \(a\) to the cover.

Next iteration, we see that there are still two edges (\((b,c)\) and \((c,d)\)). that haven't been covered yet. We wil choose \(T_k\) associated with \((b,c)\). When we increase \(y_{bc}\), the first value that makes a constraint tight is 2, making the constraint on \(c\) tight (since it already has 2 in its sum, and its cost is 4). So, we add \(c\) to the cover, which covers all the edges and terminates the algorithm.

8.4 Approximation

Theorem: Primal Dual is a max \(|T_i|\)-apx algorithm for HS.

Corollary: PD is a 2-apx algorithm for VC.

Proof of Theorem: The \(y_i\) values start at 0 and never decrease, and are always feasible (since ∑ yi < ce\). \(e\) is added to \(A\) when \(\sum_{i:e\in T_i} y_i = c_e\).

\begin{align*} c(A) &= \sum_{e \in A} c_e = \sum_{e \in A} \sum_{i:e\in T_i} y_i \\ &= \sum_{i=1}^p y_i |A \cap T_i | \\ &\le \max_{1 \le i \le p} |T_i | \cdot \sum_{i=1}^p y_i \\ &\le \max_{1 \le i \le p} |T_{i}| \cdot c(y^*) \\ &\le \max_{1 \le i \le p} |T_{i}| \cdot c(x^*) \\ &\le \max_{1 \le i \le p} |T_{i}| \cdot c(x_{int}^*) \\ \end{align*}8.5 Randomized Approximation Algorithm

LP:

- \(\min \sum_{e \in E} c_e x_e\), s.t.

- \(\sum_{e \in T_i} x_e \ge 1\)

- \(0 \le x_e \le 1\)

Randomized algorithm idea v0.1:

- Solve the linear relaxation above. This gets you an x.

- Add \(e\) to \(A\) with probability \(x_e\).

Sadly, the glaringly obvious issue here is that in general, a solution generated by this algorithm is not guaranteed to be feasible. But how would it perform? Well, it turns out that \(E[c(A)] = c(x^*) \le c(x_{int}^*)\). Which is really good. Like, potentially better than the actual solution to the ILP. Which seems problematic :P

The problem that needs to be solved is making this algorithm feasible. And the approach will be selecting a number \(t\) of trials where we will choose whether to add \(e\) to \(A\). This will allow us to reduce the probability of infeasibility, (which sadly increases the expected cost, but ya gotta do what you gotta do). There will never be a point where the probability of infeasibility of 0.

9 2015-11-11 Wednesday

9.1 Game Tree Evaluation (cont)

Same problem setup - binary tree, alternating min/max, and 0/1 leaves. Recall from last time:

- Not proven, but intuitive: for any deterministic algorithm, I can find a labelling that will force the algorithm to visit every single leaf node.

- We viewed this as a game situation where the algorithm and the input were adversaries. In this view, a randomized algorithm could be viewed as a mixed strategy as opposed to any single deterministic (i.e. pure) strategy.

- Finally, we were attempting to quantify the expected worst case number of leaves visited, by defining it recursively. We're looking at a tree with "min" at the top of each round.

Expected visits for the max layer:

- If max evaluates to 0: then E[# leaves] = \(2W(k-1)\).

- If max evaluates to 1: then E[# leaves] \(\le \frac{3}{2} W(k-1)\).

Now we need to try to quantify \(W(k)\), so we look at the min layer (above the max layer).

- If min evaluates to 0: then at least one child max evaluates to 0.

- With at least 0.5 probability, the algorithm finds it:

- \(W(k) \le \frac{1}{2}[2(W(k-1))] + \frac{1}{2}[2W(k-1) + \frac{3}{2}W(k-1)]\)

- This is \(< W(k-1)\)

- If min evaluates to 1: then we know both children evaluated to 1.

- \(W(k) \le 2 * \frac{3}{2} W(k-1) = 3 W(k-1)\)

In all cases, \(W(k) \le 3W(k-1)\le 3^k = 3^{\log_4 n} = n^{\log_4 3} = n^{0.793} = o(n)\). So by randomizing, we have improved from linear in the number of leaves, to sub linear. Yay us.

Now, we would like to show that there is not a better randomized algorithm for this problem. To do this, we will use Yao's principle:

\begin{equation} \min_y \max_i H(i,y) \ge \min_j H(x,j) \forall x \end{equation}In our case, \(H(i,y)\) is the expected number of leaves visited by randomized algorithm \(y\) on input tree \(i\). Similarly, \(H(x,j)\) is the expected number of leaves visited by deterministic algorithm \(j\) on a tree labelled by probability distribution \(x\).

What Yao's principle gives us here is that our best randomized algorithm has a lower bound determined by the minimum number of leaves expected to be visited by a deterministic algorithm on any input probability distribution \(x\). Interesting.

We will label a leaf 1 with probability \(p\) and 0 with probability \(1-p\). Doesn't matter what \(p\) is. In this case, we have \(p=\frac{3-\sqrt{5}}{2}\)

Also, instead of doing mins and maxes, since we're guaranteed to have 0's and 1's, we know that max nodes are like or's, and min nodes are like ands. If we replace all nodes with nor's, we get an equivalent tree (viewed from the top). This is because \(\lnot(\lnot(a \lor b) \lor \lnot (c \lor d)) = (a \lor b) \land (c \lor d)\). Let's look at the truth table of a nor:

| a | b | \(\lnot(a \lor b)\) | probability |

| 0 | 0 | 1 | \(1-p)^2 = p\) |

| 0 | 1 | 0 | \(1-(1-p)^2\ = 1-p\) |

| 1 | 0 | 0 | same |

| 1 | 1 | 0 | same |

Since Dr. Liberatore cleverly solved a quadratic equation, we have a nice property that each node in the whole tree is 1/0 with the exact same probability distribution: \(p\) and \(1-p\). Woah.

Ok, so now we're looking at a tree of depth two, min at the top, max at the next layer, leaves labeled left to right a,b,c,d. Let a(b,c,d) be the first (second, third, fourth) grandchild(ren) visited by a deterministic algorithm.

Let's look at cases:

| Case | \(W(k)\) | Pr |

|---|---|---|

| \(a=0 \to b=0\) | \(2W(k-1)\) | \((1-p)^2 = p\) |

| \(a=0 \to b=1 \to c=0 \to d=?\) | \(4W(k-1)\) | \((1-p^2)p = p^2\) |

| \(a=0 \to b=1 \to c=1\) | \(3W(k-1)\) | \(p^2(1-p)\) |

| \(a=1 \to c=0 \to d=?\) | \(3W(k-1)\) | \(p(1-p)\) |

| \(a=1 \to c=1\) | \(2W(k-1)\) | \(p(1-p)\) |

To find the expected value of \(W(k)\), we can just sum up each case times its probability, plug it into your favorite numerical/symbolic computing system, and get back an answer. Or, we could be lazy, and try to avoid that. Guess what we're going to do? Be lazy!

We know that the final recurrence will be of the form \(W(k) = c W(k-1)\). "Your book is fearless, and actually calculated the value of \(c\)." - Dr. Liberatore.

However, we see that 3 is sort of the "mean" value, and the probability of 2 is greater than the probability of 4. So we know that \(c<3\), but more than two. If you get the exact value of \(c\) as the book did, you would find that \(W(k) = \Omega(n^{0.694})\). The exponent is \(\log_4 c\), \(c \approx 2.62\). Our estimation was right on.

This tells us that a randomized algorithm needs to examine at least that many nodes. Our randomized algorithm examines more, and it's an open problem to try to close the gap.

10 2015-11-09 Monday

10.1 Game Tree Evaluation

(continued)

Recall:

- We have a game tree, with internal nodes labeled "min" and "max" alternating layers. The leaves have payoff values of either 0 or 1.

- Some definitions:

- \(n\): number of leaves (\(=4^K\))

- \(k\): number of rounds

- \(h\): height (\(=2k\))

- Input: \(k\), labeling of leaves.

- We were doing a "game theoretic" approach to this problem, where:

- One player tries to minimize the number of node visits by designing a smarter algorithm.

- The other player tries to maximize the number of node visits by designing a devious tree.

- We realized that really any deterministic algorithm will have an input that will force it to visit every node.

- We realized that a randomized algorithm was equivalent to a "mixed" strategy, and it may present a better expected runtime

10.1.1 Pruned Depth First Search

This is essentially a depth first search where we avoid expanding branches if we already know the parent's value. Without loss of generality, any algorithm we design will be of this form. That is (according to the book): "for any algorithm that examines \(L\) leaves, there exists a pruned DFS that examines no more than \(L\) leaves." So it's a bit simpler to think of everything in pruned DFS terms anyway.

10.1.2 Game Theory Approach to Game Tree Evaluation

Question: Here's a deterministic algorithm. Show that it examines all leaves.

Answer: A labeling that forces the examination of all leaves.

We have two players:

- The Input (worst-case). Objective: maximize \(n\).

- Strategies: \(S^1 = \{(0,0,0,0), \dots, (1,1,1,1)\}\)

- Some are not useful at all (like the first and last)

- So strictly less than 16 (or 15) strategies in the case of \(k=1\).

- The Algorithm: Objective: minimize \(n\)

- Strategies: \(S^1 = \{(L,L,L), \dots, (R,R,R)\}\).

- Each is an assignment of the "first" direction to each internal node.

- These are all unique and potentially useful strategies, so exactly 8.

10.1.3 Randomized Algorithms

RA's base their decisions on a random number generator.

Examples:

- for Game Tree Evaluation, choose a strategy in S2 uniformly at random, and use it.

- always use the first strategy (an RA doesn't have to use its RNG).

- choose a strategy in S2 at random, with any probability distribution over S2.

This already feels quite similar to mixed strategies!

Note: random ≠ arbitrary. In depth first search, we arbitrarily select an outgoing edge to expand. That doesn't mean we're doing it at random. An arbitrary selection is just a decision made ahead of time, and a random with arbitrary decisions will always run the same, and may have a worst case input.

Anyway, now that we're looking at this problem as a game, we should also note that the number of strategies of either player is exploding exponentially as a function of the number of rounds. So we can't expect to be able to use a linear program to come up with a maximized worst case mixed strategy. But we can still use game theory stuff (such as Yao's principle) to help.

10.1.4 Worst Case

Ok, our previous initial randomized algorithm was to select a strategy from \(S^1\) uniformly at random. This is equivalent to, when visiting an internal node, go L/R with probability 0.5.

Let \(W(k)\) be the worst case number of leaves examined by randomized algorithm in the expectation (\(k\): number of rounds).

- Let's look at a "max" node, which will finally evaluate to 0. We know that both nodes must have been evaluated, so \(W(k) = 2 W(k-1)\).

- Now let's look at a "max" node, which will finally evaluate to 1. This means that at least one of the children will evaluate to 1. With at least 0.5 probability, we will find it, and have \(W(k) = W(k-1)\), and with no more than 0.5 probability, we won't find it, and get \(W(k) = 2W(k-1)\). So, the expected worse case is \(W(k) \le \frac{3}{2} W(k-1)\).

11 2015-11-06 Friday

11.1 Two Person Zero Sum Games (cont)

Last time, we defined TPZSGs, and saddle points, and we talked about mixed strategies. We also formulated the problem of designing a mixed strategy to maximize a player's worst case payoff:

\begin{align*} \max v, &\text{ s.t.} \\ \sum_{i=1}^n H(i,j) x_i &\ge v \:\:\: j=1,2,\dots,m \\ \sum_{i=1}^n x_i &= 1 \\ x_i &\ge 0 \:\:\: i=1,2,\dots,n \end{align*}The dual:

\begin{align*} \min w, &\text{ s.t.} \\ \sum_{j=1}^m H(i,j) y_j &\le w \:\:\: i=1,\dots,n\\ \sum_{i=1}^m y_i &= 1 \\ y_i &\ge 0 \:\:\: j=1,\dots,m\\ \end{align*}Would you look at that! The dual is the exact same thing except for the other player. An due to duality, we know that \(v^* = w^*\), so they will result in the same value. This is Loomis's Lemma (this guy Loomis proved it before we knew about LP duality, so good for him).

We can take the constraints of the dual and sum them up like this to get a new expression (for any \(x\)).

\begin{align*} \sum_{i=1}^n x_i \sum_{j=1}^m H(i,j) y_j^* &\le \sum_{i=1}^n x_i w^* \\ \sum_{i=1}^n \sum_{j=1}^m x_i H(i,j) y_j^* &\le w^* \end{align*}And similarly for the primal:

\begin{align*} \sum_{j=1}^m \sum_{i=1}^n x_i^* H(i,j) y_j \ge v^* \end{align*}Combining these (and substituting in \(x^*\) and \(y^*\) respectively, we have:

\begin{equation} v^* \le \sum_{i=1}^n \sum_{j=1}^m x_i^* H(i,j) y_j^* \le w^* \end{equation}By definition, that quantity in the summation is \(H(x^*, y^*)\), and by duality, we know that \(H(x^*, y^*) = v^* = w^*\). This leads us to the inequality:

\begin{equation} H(x,y^*) \le H(x^*, y^*) \le H(x^*,y) \end{equation}This leads to the theorem (Von Neuman): Every 2 person zero sum game has a (mixed) saddle point.

11.1.1 Yao's Principle

This is a restatement of Loomis's lemma, with a rather obvious addendum. And then we put this into two inequalities that look a bit less obvious.

\begin{align*} \max_x \min_j H(x,j) &\le \max_i H(i,y) \forall y\\ \min_y \max_i H(i,j) &\ge \min_j H(x,j) \forall x \end{align*}And I guess that is Yao's Principle. It is useful for when you want to put an upper bound on the \(v^*\) the optimal payoff. You create a \(y\) that has \(\max_i H(i,y) \le b\).

11.1.2 Example Time

Game Tree Evaluation:

- Input: game tree. The game tree root is a maximizing node, its children are minimizing, and the next level are maximizing, etc. The leaves are labeled with 0 or 1. The tree has degree \(d\), and \(k\) rounds (which means that the height of the tree is \(2k\)). In our example, \(d=2\).

- Output: \(x\), the value at the root node.

We would like an algorithm to evaluate this tree, and we would ilke it to minimize the number of leaves visited (which is roughly equivalent to minimizing runtime).

The total number of leaves in this tree is \(4^k\).

Depending on the tree, there could be a node that will allow you to skip visiting other nodes. But with a deterministic algorithm, you can always design a worst-case input that will "hide" that information for as long as possible.

This already is similar to game theory stuff. We are one player (trying to find a worst-case input), and our algorithm is the other player (trying to terminate as quickly as possible).

12 2015-11-04 Wednesday

12.1 Two Person Zero Sum Games

Recall: a TPZSG is a game with 2 players, and the payoff of player 1 is the opposite of the payoff of player 2. We will define \(H(i,j)\) to be the payoff for player 1 of strategies \(i\), \(j\).

Definition: A saddle point is strategies \(i^*\) (first player), \(j^*\) (second player) s.t.:

- \(\forall i \in S^1\), \(\forall j \in S^2\): \(H(i, j^*) \le H(i^*, j^*) \le H(i^*, j)\)

That is, when player 1 knows player 2 is using \(j^*\), no strategy will do better than \(i^*\). And vice versa.

Example:

| 1: | 2: | 3: | |

| 1: | 3 | 4 | 3 |

| 2: | 2 | 0 | 1 |

Saddle point: \(i^* = 1\), \(j^* = 1\), with a payoff of 3.

There is not always a saddle point!

Assume ∃ saddle point \((i^*, j^*)\):

\(\forall \min_j H(i,j) \le H(i,j^*) \le H(i^*,j^*)\)

\(\max_i \min_j H(i,j) \le H(i^*, j^*) \le \min_j \max_i H(i,j)\)

When there is a saddle point, these inequalities are actually strict inequalities! Since you would simply choose \(i^*\) and \(j^*\).

12.2 Saddle Points for Mixed Strategies

12.3 Something Else

From the point of view of player 1, I expect the worst (i.e. minimizing my playoff) pure strategy from player 2. I would like to formulate a mixed strategy to play the game that maximizes my (expected) "profit". Let \(x_1, x_2, \dots, x_n\) be probabilities of strategy \(i \in S^1\). If player 2 plays \(j\) (I don't know that he will!):

\(H(x^*, j) = \sum_{i=1}^n x_i H(i,j)\)

My worst-case payoff for any strategy that player 2 can play is:

\(\min_j H(x^*, j) = \min_j \sum_{i=1} x_i H(i,j)\)

I would like to maximize my own worst-case payoff (since I know player 2 will try to minimize my payoff). That is:

\(x^* = \arg \max_x \min_j \sum_{i=1}^n x_i H(i,j)\)

How do we find this? Linear programming. We create a constraint for every strategy \(j\) can have: \(\sum_{i=1}^n x_i H(i,j) \ge v\). We want to maximize \(v\), our minimum payoff. We also need to ensure that \(\sum_{i=1}^n x_i = 1\)., and \(x_i \ge 0 \forall i\). The overall LP:

- \(\max v\), s.t.

- \(-\sum_{i=1}^n x_i H(i,j) + v \le 0 \:\: \forall j\)

- \(\sum_{i=1}^n x_i = 1\)

- \(x_i \ge 0 \:\: \forall i\)

Dual:

- \(\min w\), s.t.

- \(-\sum_{j=1}^m H(i,j) y_j + w \ge 0\)

- \(\sum_{i=1}^m y_i = 1\)

- \(y_j \ge 0\)

13 2015-11-02 Monday

13.1 Dr. Liberatore's Homework

Last time we talked about games in Behavioral (Extensive?) form, and translating them into Strategic form. We are still looking at the game tree where Player 1 has choices L and R, and then Player 2 chooses L or R after that.

- \(S^1 = \{L, R\}\)

- \(S^2 = \{(L,L), (L,R), (R,L), (R,R)\}\)

- where (x,y) means: if player 1 played L, then x. If player 1 played R, then y. (I think I missed this part last lecture!)

The probability you assign to \(S^2\) is the product of the conditional probabilities of each move. So say player 2 will move left with probability \(1/2\) when player 1 moves left, and left with probability \(3/4\) if player 1 goes right. In this case, the probability of strategy \(s^2=(L,L)\) is \(\frac{3}{8}\).

Computing the probability of each of the payoffs can be done with this setup, you just have to make sure that you add up probabilities for both strategies that allow a certain branch to take.

Given a game in extensive form:

- Wlog the payoff leaves are distinct (if not, add a player who never gets to move and whose payoff is different from leaf to leaf).

\(Pr[H(l)]\). If you have a path from the root to a payoff, then the probability is:

\begin{equation} Pr[H(l)] = \prod_{e \in \text{Path root} \to l} p(e) \end{equation}Here, \(p(e)\) is the probability that edge \(e\) is chosen. We can subdivide this into terms for each player:

\begin{equation} Pr[H(l)] = \prod_{e \in \text{Path root} \to l} p(e) = \prod_{i=1}^n \prod_{e \in \text{Path root} \to l, e \text{chosen by player } i} p(e) \end{equation}When you transform the game to strategic form, a strategy set will be the set of all possible choices for all the players. To compute the probability of getting to a certain payoff, you do the following:

\begin{equation} Pr[H(l)] = \prod_{i=1}^n Pr[\text{player }i\text{ contemplates }e_1, e_2, \dots] \end{equation}Which evaluates to the same probability.

13.2 Our Homework

13.2.1 MCNF and Max Flow

Can't have negative upper bounds! For the nurse problem, you had to maximize the number of unused nurses, not try to maximize the negative of the number of nurses used.

13.2.2 Successive Shortest Paths

Negative reduced costs should not happen, they indicate an error in your work. That's fine if you encounter that and recognize it, but it sounds like a lot of people got negative reduced costs.

Each iteration, you have \((c_{ij}^\pi, r_{ij})\) for each arc, and \((e_i, \pi_i)\) for each node \(i\).

- You compute the shortest path using the reduced costs. This assigns \(d_i\) values.

- You determine the shortest path and augment flow.

- You draw a new network. The $πi$'s are updated by \(\pi_i - d_i\).

- The topology is changed to to the augmentation.

- However, the \(c_{ij}^\pi\) are always recomputed with \(c_{ij}^\pi = c_{ij} - \pi_i + \pi_j\), not using the old \(c_{ij}^\pi\)

14 2015-10-30 Friday

14.1 Games (strategic form)

14.2 Mixed Strategies

- Mixed strategy for \(i\)th player:

- \(x^i\): probability distribution over \(S^i\).

- Mixed strategy profile:

- \(\vec{x} = (x^1, x^2, \dots, x^n)\)

- Strategy profile \(\vec{s} = (s_1, s_2, \dots, s_n)\) has probability \(\vec{x}(\vec{s}) = x^1(s_1)x^2(s_2) \dots x^n(s_n)\).

- Expected payoff:

- \(H(\vec{x}) = \sum_{s\in S^1 \times S^2 \times \dots \times S^N} \vec{x}(\vec{s}) H(\vec{s})\)

14.3 Games (Behavioral Form)

There is a tree that shows what the players can do. Say, the first level is player one, and there is a branch for each move player one can do. Then, the next level is for the next player, and at each "child node" game state, there is a branch for each move the player can make.

At the leaves of the tree you have the payoff function.

- The behavioral form is probably a bit more intuitive. It represents players' moves as sequential, whereas the strategic form doesn't impose that.

- The book has a proof that the behavioral form and strategic form are equivalent. But we won't be doing much with behavioral form, so we'll just do an example of one.

Our example of behavioral form was a binary tree with two levels, where player one would first choose between left and right, and then player two would choose between left and right as well.

We had a bit of a disagreement on how to represent player two's set of pure strategies, and its probability distribution. Dr. Liberatore represented \(S^2 = \{LL, LR, RL, RR\}\), and created a probability distribution over that. I disagree; I think that you should represent \(S^2=\{L, R\}\), and that \(x^2\) is just conditioned on \(x^1\). But, we spent a lot of time on this dispute without getting anything done, so we moved on.

14.4 Two person zero sum game

- \(n=2\)

- \(H_1(\vec{s}) = -H_2(\vec{s})\)

This means that the first player's maximization is the second player's min.

- There is/(may be) a saddle point \((i^*, j^*) \in S^1 \times S^2\).

- \(H(i,j^*) \le H(i^*,j^*) \le H(i^*,j)\), \(\forall i \in S^1, j \in S^2\)

- If player two plays \(j^*\), then player 1 wlog plays \(i^*\).

- If player one plays \(i^*\), its payoff is guaranteed to be \(\ge H(i^*, j^*)\). It could be more if player two makes a mistake.

- There doesn't have to be a saddle point, I guess. For instance, the matching pennies game doesn't have a saddle point.

- Andrew's musings: A saddle point is a [https://en.wikipedia.org/wiki/Nash_equilibrium[Nash equilibrium]]. For pure strategies, there does not have to be a saddle point, but if you allow mixed strategies, then for every two-player, zero sum game, there is always a saddle point (from ECON 329).

15 2015-10-28 Wednesday

15.1 Capacity Scaling (cont)

The algorithm:

- First, set a flow \(x \gets 0\), \(\pi \gets 0\)

- \(\Delta \gets 2^{\lfloor\log U\rfloor}\)

- while \(\Delta \ge 1\)

- Here, we maintain the invariant:

- foreach \((i,j) \in E\):

- if \(c_{ij}^\pi < 0\), then arc reversal

- SSP(\(\Delta\))

- \(\Delta \gets \Delta / 2\)

- The algorithm holds the loop invariant that \(c_{ij}^\pi \ge 0 \:\:\: \forall (i,j) \in E(x,\Delta)\).

- SSP(\(\Delta\)) terminates when either \(E(\Delta) = \emptyset\) or \(D(\Delta) = \emptyset\). So either \(0 \le e_i < \Delta\) or \(0 \ge e_i > -\Delta\). This means that the total excess ≤ \(n\Delta\).

- After we modify \(\Delta\), the total excess ≤ \(2n\Delta\).

- However, the arc reversals introduce a worst case total of \(2m\Delta\) excess, so at the beginning of SSP, we get a total excess ≤ \(2(n+m)\Delta\).

- At \(\Delta=1\), \(G(x,\Delta) = G(x)\), so we are solving the original residuals.

- We can think of CS as a logarithmic number of scaling phases to prepare for \(SSP(1)\) (the original problem).

- Kinda like Shell Sort!

15.1.1 Runtime

The original SSP took \(O(nUS)\). It was based on the idea that you would send (in the worst case) 1 unit of flow each time, so you would do \(nU\) augmentations, multiplied by \(S\), the shortest path time. We have changed our SSP algorithm so that it pushes \(\Delta\) units of flow at each iteration. We said above that at the beginning of SSP, the total excess ≤ \(2(n+m)\Delta\). By \(2(n+m)\) iterations, we should have excess set to 0. So, the runtime is \(O((n+m)S)\) for each SSP, and the total runtime is \(O((n+m)S\log U)\). (for the record, \(m\) is the number of edges). This means that capacity scaling is in fact a polynomial time algorithm!

15.2 Max Flow Min Cut

Suppose you have a source vertex, and a network without costs but with capacities. The maximum flow from \(s\) to \(t\) is equal to the min cut that separates \(s\), \(t\). A cut is defined as a partition of the set of vertices. The min cut is the one that minimizes the value of the edges (in this case, capacities) that span the partition. It actually really makes sense that max flow is equal to min cut, because the min cut is like the smallest "pipe width" you can send flow through.

Unfortunately, in order to solve min cut you pretty much have to solve max flow. So there isn't a huge breakthrough due to this property. It's just interesting and sometimes useful.

15.3 Game Theory!

We have a game where a professor puts a coin under a cup, and a student guesses whether it's heads or tails. If the student guesses correctly, they get a point and the professor loses one. Otherwise, the professor gets a point and the student loses one. To summarize:

| Student Guesses Heads | Student Guesses Tails | |

| Professor Picks Heads | Professor-1, Student+1 | Professor+1, Student-1 |

| Professor Picks Tails | Professor+1, Student-1 | Professor-1, Student-1 |

Here is a theoretical definition of a game (in strategic terms):

- Set \(N=\{1,2,\dots,n\}\) players.

- Set \(S^i \) of pure strategies for the \(i^{\text{th}}\) player.

- Payoff function \(H: S^1 \times S^2 \times \dots \times S^n \to R^n \)

- A strategy profile: \((s_1, s_2, \dots, s_n)\)

- \(H_i(s_1, s_2, \dots, s_n)\) is the payoff for player \(i\)

In this coin flipping game, there are two players (player one is professor, player two is student), and \(S^1=S^2=\{h,t\}\). The table above summarizes the payoff, but I'll do it again in terms of the players and strategies again.

| s2=h | s2=t | |

| s1 = h | (-1, +1) | (+1,-1) |

| s2 = t | (+1, -1) | (-1,+1) |

Def: A mixed strategy of player \(i\) is a probability distribution over \(S^i \).

- \(\vec{x}\): mixed strategy profile

- \(\vec{x} = (x_1, x_2, \dots, x_n)\)

- \(Pr[s] = x_1(s_1)x_2(s_2)\dots x_n(s_n)\)

16 2015-10-26 Monday

16.1 Runtime of Successive Shortest Paths

- Worst case complexity, as a function of \(n\), is sometimes tricky.

- We talk about it in terms of "input size", with any reasonably efficient encoding.

- Some things that look polynomial (e.g. \(n^k \)) are not, because \(k\) is an input to the problem.

- Some things that don't look polynomial (e.g. \(n \log n\)) are, because they are bounded above by a polynomial function (e.g. \(n^2 \)).

- So, with that in mind, let's look at the runtime of SSP.

The runtime is \(O(n \times U \times S)\), where:

- \(n\) is the number of vertices

- \(U = \max_{i\in V} \{|b_i |\}\)

- \(S\) is the shortest path runtime

This is not polynomial! Let's look at the terms. We'll assume \(S\) is fine. To represent the graph, we need at least \(n\) bits (probably some constant multiple of \(n\), for the edges and things). So, \(n\) is an input size, and the \(n\) term is polynomial.

However, \(U\) is not an input size. It's an input number. It's completely unrelated to the storage of the graph and the input size. You could make it whatever you'd like. Because of this, you only need \(\log_2 U\) bits to store it. Thus, \(U\) is exponential in the input size. (A useful counterexample - if \(U=2^n \), then it's \(O(n2^n S)\)).

It's a pseudo polynomial time algorithm. If we represented \(U\) in unary, it would be polynomial. But that's not a reasonably efficient encoding.

16.2 Capacity Scaling

- \(\Delta = 2^{\lfloor\log U\rfloor},\dots, 2, 1\)

- \(E(\Delta) = \{e_i \ge \Delta: i \in V\}\)

- \(D(\Delta) = \{e_i \le -\Delta: i \in V\}\)

- \(G(x,\Delta) = (V, E(x,\Delta))\) - the residual network w.r.t. \(x\), w/ edges \(r_{ij} \ge \Delta\).

The idea of this algorithm is that we want to solve the biggest supplies and demands first.

SSP(Δ):

- Input: \(G(x, \Delta)\) s.t. \(c_{ij}^\pi \ge 0 \:\:\: \forall (i,j) \in E(x,\Delta)\)

- \(E(\Delta) \gets \{e_i \ge \Delta: i \in V\}\)

- \(D(\Delta) \gets \{e_i \le -\Delta: i \in V\}\)

- while \(E(\Delta), D(\Delta) \ne \emptyset\):

- choose \(k \in E(\Delta)\), \(l \in D(\Delta)\)

- find shortest path \(P: k \to l\) in \(G(x, \Delta)\) w/ \(c_{ij}^\pi \)

- send \(\Delta\) units of flow along \(P\).

- \(\pi \gets \pi - d\)

- update \(x\), \(E(\Delta)\), \(D(\Delta)\), \(G(k,\Delta)\).

Thinking about this algorithm, we see that each iteration of this will not necessarily remove an edge from the residual. It could just drop that node out of \(E(x,\Delta)\), while still keeping its residual greater than 0. So, we need the capacity scaling algorithm below:

Capacity Scaling:

- \(x \gets 0, \pi \gets 0\)

- \(\Delta \gets 2^{\lfloor\log U\rfloor}\)

- while Δ ≥ 1:

- for each \((i,j) \in G(x)\):

- if \(c_{ij}^\pi < 0\) then arc reversal

- SSP(\(\Delta\))

- \(\Delta \gets \Delta / 2\)

- for each \((i,j) \in G(x)\):

- Claim: \(c_{ij}^\pi \ge 0 \:\:\: \forall (i,j) \in E(x,\Delta)\) throughout SSP(\(\Delta\)).

- Proof: similar to SSP but \(G(x, \Delta)\) …

- Sketch: if we have an edge that is in \(E(x, \Delta)\) but not \(E(x, 2\Delta)\), we cannot guarantee the claim. But an arc reversal will fix this…

Runtime: \(O(n \times \log U \times S)\)

- How do we get that? Let's look at the total excess right after we adjust

\(\Delta\). Due to the termination condition of SSP, we have:

- either \(\forall i \in V: 0 < e_i < 2\Delta\),

- or \(\forall i \in V: 0 > e_i > -2\Delta\).

- These imply:

- either: total excess \(\le 2n\Delta\)

- or: total deficit \(\ge -2n\Delta\)

17 2015-10-21 Wednesday

17.1 MCNF Algorithms

Assumptions

- All data is integral (this implies that solutions are integral as well).

- An "integral part" of why the solutions are all integral.

- \(c_{ij} \ge 0 \:\:\: \forall (i,j) \in E\)

- If you have a path that contains cycles, and all weights are non-negative, you're better off eliminating all cycles.

- \(\forall u,v \in V \:\:\: \exists P: u \to v\) (that is, there is a path from every node

to every other one).

- Let \(|P| \le n - 1\). Since \(c_{ij} \ge 0\):

- cost of \(P \le n C = n \max_{(i,j)\in E} \{c_{ij}\}\)

- I missed the lead up to this, but: "An optimal solution should not send flow through \(z\) if \(\exists\) another path from \(u\) to \(v\)."

17.1.1 Successive Shortest Paths

Imagine that you have a number line of the objective value. You take a feasible flow (?), then take its dual and start improving the dual until it's feasible, and then it's optimal (?).

Definition: pseudo-flow \(x\) satisfies \(u_{ij}\) but not necessarily \(b_i\).

- EG: \(x=0\). This satisfies the upper bounds, but usually not the supplies and demands.

- We can also let \(\pi=0\) in the dual. This means that the reduced costs \(c_{ij}^\pi = c_{ij} - \pi_i + \pi_j \ge 0\), and the residual is the original network. This means that \(x=0\) and \(\pi=0\) are related by complementary slackness. So this is a starting point for SSP.

Definition: \(e_i \): excess at vertex \(i\) w.r.t. pseudo flow \(x\):

\begin{equation} e_i = b_i + \sum_{(j,i) \in E} x_{ji} - \sum_{(i,j) \in E} x_{ij} \end{equation}Initially, (at \(x=0\)), \(e_i = b_i\).

Then, we define the:

- Excess vertices: \(E = \{i \in V: e_i > 0\}\)

- Deficit vertices: \(D = \{i \in V: e_i < 0\}\)

Assuming we have a feasible solution, \(E \ne \emptyset \leftrightarrow D \ne \emptyset\) (initially?).

So, if you have two vertices, one in \(E\) and one in \(D\), you want to send some flow between them. How do we update the \(\pi\) values when you come up with the new \(x\)?

Claim: Let \(x\) be pseudo-flows and \(\pi\) be node potentials, satisfying complementary slackness. We also have \(G(x)=(V, E(x))\), the residual (residuals only use upper bounds and flows, not supplies, so we can have residuals for pseudo-flows). Finally, let \(c_{ij}^\pi \) be the reduced costs for \((i,j) \in E(x)\).

- Define \(d_i\) to be the length of the shortest path from \(s \in V\) to \(i\) (\(\forall i \in V\)) in the residual, with lengths \(c_{ij}^\pi \). (\(s\) is arbitrary but fixed as some special vertex \(s \in V\)). Since \(c_{ij}^\pi \ge 0\), this is well defined.

OK, two results of all these assumptions and notations:

- \(x\), \(\pi'\) satisfy complementary slackness, where \(\pi'=\pi-d\)

- \(c_{ij}^\pi = 0 \:\:\: \forall (i,j) \in\) shortest path tree

Example (initial \(\pi = 0\))

| i | bi | di | π'i |

|---|---|---|---|

| 1 | 4 | 0 | 0 |

| 2 | 0 | 2 | -2 |

| 3 | 0 | 2 | -2 |

| 4 | -4 | 2 | -3 |

| i | j | cij | uij | cij^π | cijπ' |

|---|---|---|---|---|---|

| 1 | 2 | 2 | 4 | 2 | 0 |

| 1 | 3 | 2 | 2 | 2 | 0 |

| 2 | 3 | 1 | 2 | 1 | 1 |

| 2 | 4 | 3 | 3 | 3 | 2 |

| 3 | 4 | 1 | 5 | 1 | 0 |

The shortest path tree is: (1,2), (1,3), (3,4). The (new) reduced costs for those edges are all 0. In this case, they are the only edges that have reduced costs 0, but that's not true in the general sense.

Proof: (I doubt we'll have time to finish this bad boy)

- Proof of the first part (\(x\), \(\pi'\) satisfy complementary slackness):

\(d_j \le d_i + c_{ij}^\pi \:\:\: \forall (i,j) \in E(x)\) (I believe this is a Bellman-Ford inequality)

\begin{align*} c_{ij}^{\pi'} &= c_{ij} - \pi_{i}' + \pi_{j}' \\ &= c_{ij} - \pi_i + d_i + \pi_j - d_j \\ &= c_{ij}^\pi + d_i - d_j \ge 0 \end{align*}Well, that was neat.

- Proof of second part \((i,j) \in SPT\), \(c_{ij}^\pi = 0\)

- If \((i,j) \in SPT\), then…

- We have $dj = di + cij^π

- And therefore when you do the computation above, you end up with \(c_{ij}^{\pi'} = \dots = c_ij^\pi + d_i - d_j = 0\). So that was also easier than expected.

Now we're talking about CS:

- \(\alpha_{ij} (u_{ij} - x_{ij}) = 0\)

- \(x_{ij} (c_{ij}^\pi + \alpha_{ij}) = 0\)

When you have \(c_{ij}^\pi = 0\), \(\alpha_{ij} = 0\), and so both CS conditions are satisfied (for any flow \(x\)).

You can send any amount of flow along this SPT, and it will be optimal! Our job is just to find a flow that satisfies the upper bounds.

18 2015-10-16 Friday

Midterm was today.

19 2015-10-14 Wednesday

Update: Problem 5 on the homework we went over on Monday did not actually have a solution that involved shortest paths. Oops!

Friday is the midterm. (I'm a bit scared). So I guess we'll do some review.

- The "Reading List" syllabus on Blackboard contains all the topics.

- There will be four questions.

- Easier than the homework (but very similar)

- Doubtful that we will have enough time to solve them all. We won't be graded out of 100% completion.

- We should have enough time to "get a start" on them all and "write something meaningful".

- Doubtful that we will need to write anything in Octave.

- Open books, open notes, open electronics (w/o internet). Of course, this isn't the most helpful to you if you bring everything, because you won't have time to use it in 50 minutes.

19.1 Back to Equipment Replacement

- \(c_{ij}\): cost of operating machine from year \(i\) to year \(j\). \(1 \le i < j \le n\).

- Minimize the total cost s.t.

- At every time, the number of running machines ≥ 1.

- Last time, we got to the point where we had a graph with nodes 1 through \(n\). Each node had an arc to all the future years, with the \(c_{ij}\)'s labeled on it.

- Put a supply of 1 on 1, and a supply of -1 on n. This seems like it would

solve the problem, but it doesn't allow for us to have multiple machines

operating at one time.

- One solution would be to have a single "source" node that connects forward and backward with each year node. But this allows us to send machines "forward" in time with zero cost. Bad.

- My initial solution to that was to have a set of nodes 1' through n', with edges (i',1) through (i', i-1), and then (i, i'). This allows only backward flow. This does solve the problem, but wih quadratic arcs.

- The next improvement on that was to have each "prime" node connected backward to the previous one, instead of all of the previous regular nodes. This improved it to linear number of arcs.

- Then we realized that each node could just be directly connected to its backward neighbor with cost of zero. Oops.

19.2 Back to Uniform Cost Parallel Machines

- \(n\) jobs, length \(l_i \le T\), \(\sum_{i=1}^n l_i \le TM\), where \(T\) is the time unit, \(M\) the number of machines.

- We had a max flow solution to assign job segments into time slots, now we

need to fill up the machines so that there is no overlap.

- To do this: assign the first job into the first machine. Assign the next job to the remainder of the machine's time. Then put that job's remainder onto the next machine. You know it won't overlap because the segment must be ≤ T.

- Yay.

19.3 Midterm Questions

Now we talk about the midterm.

- Mostly "solving problems"

- They will be "like" homework problems. Not necessarily "same question, different numbers". But similar in spirit.

- There could be reductions to linear programs.

- There could be reductions to shortest paths

- Should probably review your linear algebra so it's easier to

- (This is only slightly terrifying)

19.4 Final Exam

- 30-40 minute oral exam!!!

- Will be plenty of talk on concepts, explaining yourself, etc.

- (This is actually a lot terrifying)

20 2015-10-12 Monday

20.1 Homework 3 Review

Problem 1: when converting nodes with capacities into pairs of nodes without capacities, you assign the supplies to the input/output node by the following rule:

- If \(b_i > 0\), then assign it to the output node.

- If \(b_i < 0\), then assign it to the input node.

- Otherwise, it doesn't really matter, both nodes get 0.

Also on problem 1, make sure when you draw graphs you explain what your numbers mean. A good policy is to include a legend.

Finally, it's generally better to get rid of capacities first, because we don't have solid rules on how to get rid of lower bounds with nodes that have capacities.

For my case, I know I forgot to decrease the upper bound of \((c,d)\) when I removed the lower bound. My other error was putting the negative \(b_i\) on the output node.

Problem 4: The strategies for finding \(\pi_i\) were varied. Some wrote the primal, and then found the dual, and then solved it with Octave, and then used the dual solution. Others guessed and checked. Others used the \(c_{ij} - \pi_i + \pi_j \ge 0\) condition and found a feasible solution to that (which was not too hard given the number of parallel arcs). The other way was to pick a starting node from which all other nodes are reachable, and then find the shortest to each node. The negative of these values are suitable to use as node potentials. Admittedly, in my solution, I didn't show any work for the node potentials, but I used the shortest path method.

Problem 5: Here is one solution to a: 2 3 2 3 2. Here, Alice always loses no matter what she chooses. My solution is similar to that (1 6 7 5 8 4 9 3 10 2). This sort of solution may trick you into believing that if Alice has any winning strategy, the best choice is to choose the max chip. This is not true. Consider this arrangement: 10 1000 3 4. Alice should choose 4 in order to protect the 1000, forcing Bob to open it up for her to take on the next turn. This strategy requires that she remove the lower of the choices.

One way to solve part b is to formulate the solution recursively, and come up with a dynamic programming algorithm. Then do as in class and recast the dynamic programming solution as a shortest path solution.

Another way to do it would be to do game trees, where you represent each node as a series of decisions, and its children are the results of all the possible decisions from that node. But this has \(2^n\) nodes, which is pretty bad. There is also the problem that a shortest path solution will minimize the strategy of Bob, which doesn't really do what we want.

Dr. Liberatore's solution is to formulate each node in a graph as a list of chips, and make transitions a full round (Alice, Bob). We identified a similar issue to the previous strategy (where it minimizes Bob's strategy). Hopefully next class we'll get it figured out.

21 2015-10-09 Friday

21.1 Uniform Parallel Machines

You have a set of \(n\) jobs:

- \(s_i\): start time of job \(i\)

- \(d_i\): deadline of job \(i\)

It seems like the idea is to schedule each job to a time interval on a machine so that everything happens. The claim is that this reduces to Max Flow.

| Job | 1 | 2 | 3 | 4 |

| ri | 1.5 | 1.25 | 2.1 | 3.6 |

| Interval | Jobs |

|---|---|

| 1,3 | 2 |

| 3,4 | 1,2,3 |

| 4,5 | 1,3 |

| 5,7 | 3,4 |

| 7,9 | 4 |

We create a network with a source, and a sink. We create nodes for the jobs, and we create nodes for each time interval. We create arcs from the source to each time interval, with capacity of \(M * (f_i - s_i)\). Then we create nodes for each job. We create arcs from the jobs to the sink with capacity of \(r_i \). Finally, we create arcs from the intervals to the jobs, with capacity \(f_i - s_i \).

To go from a flow to a schedule: If we have \(x_{(h,k), i} > 0\), we schedule \(i\) in \([h,k]\) for \(x_{(h,k),i}\) time. We may have to do some finagling to ensure that everything fits, but we'll come back to that issue. In any case, we can also go from a schedule to a flow.

A schedule/flow is feasible if every job → sink link is at capacity.

21.2 Equipment Replacement

When you buy a machine, it operates very nicely at the beginning, but then deteriorates and you need to repair it. You'll need to repair it more and more and more as you go. At some point, it becomes cheaper to replace it with a new machine than to continue repair it.

So, say you buy a machine at time \(i\) and sell at time \(j\).

- \(c_{ij}\): total cost of operating machine from time \(i\) to \(j\). This includes the cost of purchase, minus the sell price, plus repair prices.

At least one machine must be operating at all times. The problem is to find an optimal replacement schedule. The claim is that this reduces to MCNF. We are given a discrete set of times 1 through \(n\) to buy and sell at.

You can create a network with a node for each time. Each time \(i\) is connected to each future node \(j\) with cost \(c_{ij}\). You set the supply at \(1\) to 1, and supply at \(n\) to -1. The one problem is that this doesn't allow for more than one machine to be running at a time. So, we're trying to solve that by adding a source and sink node, but things aren't working out perfectly.

22 2015-10-07 Wednesday

22.1 Max Flow Reduction: Matrix Rounding

A government agency collects data via a census, in the following matrix \(A\):

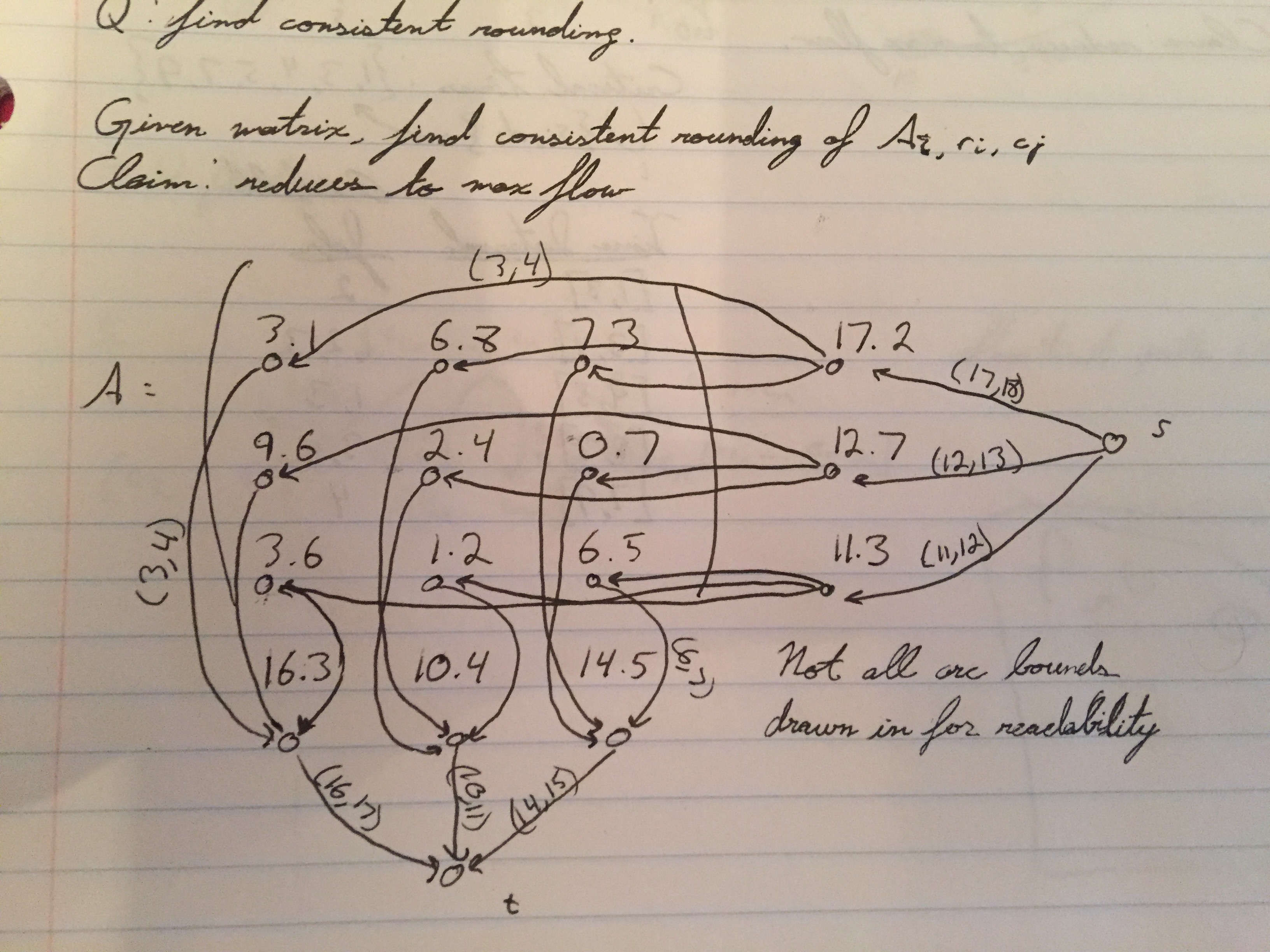

\begin{bmatrix} 3.1 & 6.8 & 7.3 \\ 9.6 & 2.4 & 0.7 \\ 3.6 & 1.2 & 6.5 \\ \end{bmatrix}Where zip codes are along the x-axis, and tax brackets are along the y-axis. Given enough such matrices to cross-reference, a nefarious individual would be able to identify exactly which members of their community are in which tax brackets. So, the agency would like to obfuscate the data in the matrix.

Create a new matrix \(B\) such that \(b_{ij}\) is either \(\lfloor{}a_{ij}\rfloor\) or \(\lceil{}a_{ij}\rceil\) However, \(B\) should also obfuscate the sums of both the columns and rows of \(A\) in the same manner (so, \(\sum_{i}b_{ij}\) should be either \(\lfloor{}\sum_{i}a_{ij}\rfloor\) or \(\lceil{}\sum_{i}a_{ij}\rceil\), and so on, for each row and column).

A ``consistent rounding'' satisfies all these constraints.

Question: Given matrix \(A\), find a consistent rounding. Claim: Reduces to max flow.

See the (hopefully) included image.

Figure 1: (Hopefully) included image.

Let \(r_i\) be the sum of row i, and \(c_j\) be the sum of column j.

The idea is to have a node for each element of \(A\), as well as nodes for the column and row sums. Then, make an arc going from \(a_{ij}\) to \(c_j\), with lower bound of \(\lfloor{}a_{ij}\rfloor_{}\) and \(\lceil{}a_{ij}\rceil\). Also, make an arc going from \(r_i\) to \(a_{ij}\) with the same upper and lower bounds.

Now, make an arc going from each \(c_j\) to a sink node \(t\). Each arc will have lower bound \(\lfloor{}c_j \rfloor\) and upper bound \(\lceil{}c_{j}\rceil\). Similarly, make an arc going from a source node \(s\) to each \(r_i\). Each arc will have upper bound \(\lfloor{}r_{i}\rfloor\) and upper bound \(\lceil{}r_{i}\rceil\).

This is sufficient to formulate matrix rounding as a max flow problem. Note that there is actually a second formulation, which reverses the directions of all the arcs.

22.2 Max Flow Reduction: Job Scheduling

The remainder of class was spent talking about the job scheduling problem, which appears to be described in full on the notes for 2015/10/09.

23 2015-10-05 Monday

23.1 Max Flow

def Max flow: G = (V, E), with \(\forall (i,j)\:u_{ij},\text{zero costs, supplies, and demands}\). One edge going from source to sink with no upper bound, -1 cost. The optimal solution will maximize the flow from source to sink.

23.2 Feasible Flow Problem

def Feasible flow: Given a network with supplies and upper bounds, show that a flow exists.

Claim: Feasible flow reduces to Max flow.

Steps:

- (remove lower bounds, if needed)

- from all sinks, put edge to t with \(u_{it}=-b_{i}\)

- to all sources, put edge from s with \(u_{si}=b_{i}\)

- set supplies/demands of all nodes to zero

- connect t to s, -1 cost, no upperbound

- Solve max flow. If all upper bounds met going into t / out of s,

(i.e. final supplies/demands are 0) then there is a feasible flow.

23.3 Reductions

To prove a reduction from one problem to another, show a 1-to-1 mapping between solutions to each problem.

Ex. Show feasible flow reduces to max flow: Proof: (\(feasible flow \Rightarrow max flow \)

- Let x be a feasible flow. Let \(y_{ij}=\begin{cases}x_{ij}\text{, if}(i,j)\in E \\ u_{ij}\text{, if}(i,j)\notin E \end{cases}\)

- We know the value of the max flow \(\le \sum u_{si}\) and the value of the y flow \(≤ ∑ ysi = ∑ bi \mathbb{1}bi ≥ 0 ≥) value of max flow. So, If val(max flow) == val(y flow), y flow is optimal.

24 2015-10-02 Friday

MCNF: special cases and applications

Taking problems and mapping them to MCNF is preferable because:

- MCNF is faster that LP

- MCNF is unimodular, so you can drop integrality constraints.

- This makes it attractive. How can we solve other problems with it?

24.1 Shortest Paths

Given:

- directed graph \(G=(V,E)\)

- \(s \in V\)

- lengths \(c_{ij}\) associated with \((i,j)\in E\).

Find the shortest path from \(s\) to \(u\). \(u \in V - \{s\}\).

I feel like after EECS 340, I don't really need to copy down the graph we're using for demonstration. So, shortest paths are a special case of MCNF.

- Set costs to the lengths of the edges.

- Lower bounds 0

- Upper bounds infinite

- Set source node supply to \(|V-\{s\}|\), and all other nodes to \(-1\).

If you get fractional solution for a single solution, you could use either flow. Note that people really don't try to use MCNF to solve shortest paths; rather, they sometimes use repeated Dijkstra's algorithm to solve MCNF (instead of LP).

- \(\min \sum_{(i,j)\in E} c_{ij} x_{ij}\), s.t.

- \(\sum_{(s,j) \in E} x_{sij} - \sum_{(j,s) \in E} x_{js} = n - 1\)

- \(\sum_{(i,j) \in E} x_{ij} - \sum_{(j,i) \in E} x_{ji} = -1\) for \(i ∈ V-\{s\})

- \(x_{ij} > 0\), for \((i,j) \in E\)

The dual:

- \(\max (n-1) \pi_s - \sum_{i \in V-\{s\}} \pi_i\), s.t.

- \(c_{ij} - \pi_i + \pi_j \ge 0\), for \((i,j) \in E\)

- π's unrestricted.

Let's look at the objective function and make a change of variable:

\begin{equation} (n-1) \pi_s - \sum_{i \in V-\{s\}} \pi_i = n \pi_s - \sum_{i\in V} \pi_i = \sum_{i \in V} (\pi_s - \pi_i) = \sum_{i\in V} d_i \end{equation}So, we change variable to \(d_i = \pi_s - \pi_i\). Above is the new objective function using the change of variable. Now we'll rewrite the whole thing using \(d_i\).

- \(\max \sum_{i\in V} d_i \), s.t.

- \(d_j \le d_i + c_{ij}\), for all \((i,j) \in E\)

The intuition is that \(d_j \) is the distance from the source to node \(j\). We are maximizing, which seems funny, but it makes sense when you realize that the constraints are holding back the whole thing. The constraints here are the Bellman-Ford optimality conditions, which are what Dijkstra's Algorithm does at every step (and of course the Bellman-Ford algorithm).

24.2 Dynamic Programming!!!!!

Dynamic programming is an algorithm design technique, not an algorithm in

itself. However, we're going to make a sweeping claim here: "all dynamic

programming is a special case of network flow". (say-whaaaaat.gif)

Figure 2: Say Whaaaaat?

Weighted Interval Scheduling: You have \(n\) intervals \((s_i, f_i), v_i \). Find the subset of non-overlapping intervals with maximum value.

Solution: \(M(i)\) is the maximum value from scheduling intervals 1, 2, …, i. We want \(M(n)\). We do know that \(M(0) = 0\). Recursively:

\begin{equation} M(i) = \max \left\{ M(i-1), v_i + M(p(i))\right\}\ \end{equation}Here, \(p(i)\) is the first predecessor interval that doesn't overlap with \(i\). The two conditions are either (1) we don't choose interval \(i\), or (2), we do choose interval \(i\). In the solution algorithm, we keep a vector of \(M(i)\) values and compute it from \(0\) up to \(n\), and that value is your optimal value.

| 0 | 1 | 2 | … | p(i) | … | i-1 | i | … | n |

| 0 | M(p(i)) | M(i-1) | M(i) | M(n) |